Environment:

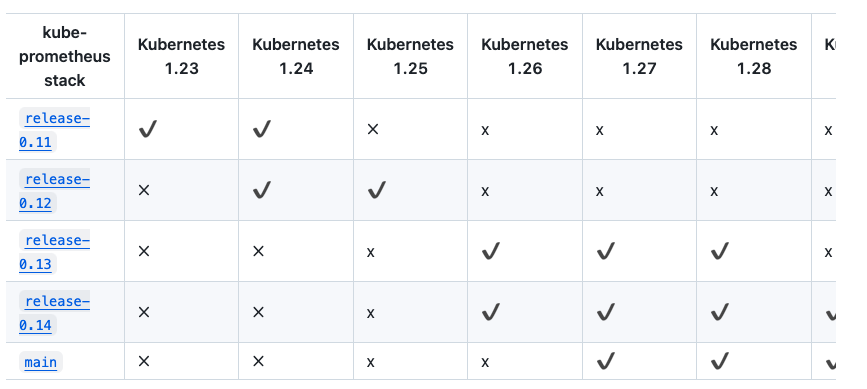

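The Kubernetes version used here is v1.30, so the kube-prometheus versions that can be used are v0.14 and main.

Note: the kube-prometheus version must match the Kubernetes version. Check the compatibility matrix in the project README before picking a release.

Download link: https://github.com/prometheus-operator/kube-prometheus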

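Download

    wget https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.14.0.tar.gz

Extract the archive to prepare for installation. It unpacks into a kube-prometheus-0.14.0 directory:

    tar -xzf v0.14.0.tar.gz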
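Some images are hard to pull (see the image notes further down). It can therefore help to list every image the manifests reference before installing. The script below is a minimal sketch that assumes the archive was extracted to kube-prometheus-0.14.0. `KP_DIR` and `list_images` are names invented for this example. The script only greps the top-level manifest files for `image:` keys, so the CRDs in manifests/setup are skipped.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical variable: directory produced by extracting v0.14.0.tar.gz.
KP_DIR="${KP_DIR:-kube-prometheus-0.14.0}"

# Print each distinct container image referenced by the top-level manifests.
# The *.yaml glob does not descend into manifests/setup, so CRDs are ignored.
list_images() {
  local dir="${1:-$KP_DIR}"
  grep -hoE '^[[:space:]]*(- )?image: *[^[:space:]]+' "$dir"/manifests/*.yaml \
    | sed -E 's/^[[:space:]]*(- )?image: *//' \
    | sort -u
}

if [ -d "$KP_DIR/manifests/setup" ]; then
  list_images
else
  echo "$KP_DIR/manifests/setup not found; extract the release first" >&2
fi
```

Any registry.k8s.io entries in the output are candidates for the manual download described later in this post.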

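Install: first create the CRDs and the monitoring namespace from manifests/setup, then the rest of the stack from manifests/.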

    [root@k8s-master ~]# kubectl create -f kube-prometheus-0.14.0/manifests/setup/
    customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created
    customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created
    customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created
    customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created
    customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created
    customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created
    customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created
    customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created
    namespace/monitoring created

    [root@k8s-master ~]# kubectl create -f kube-prometheus-0.14.0/manifests/
    alertmanager.monitoring.coreos.com/main created
    networkpolicy.networking.k8s.io/alertmanager-main created
    poddisruptionbudget.policy/alertmanager-main created
    prometheusrule.monitoring.coreos.com/alertmanager-main-rules created
    secret/alertmanager-main created
    service/alertmanager-main created
    serviceaccount/alertmanager-main created
    servicemonitor.monitoring.coreos.com/alertmanager-main created
    clusterrole.rbac.authorization.k8s.io/blackbox-exporter created
    clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created
    configmap/blackbox-exporter-configuration created
    deployment.apps/blackbox-exporter created
    networkpolicy.networking.k8s.io/blackbox-exporter created
    service/blackbox-exporter created
    serviceaccount/blackbox-exporter created
    servicemonitor.monitoring.coreos.com/blackbox-exporter created
    secret/grafana-config created
    secret/grafana-datasources created
    configmap/grafana-dashboard-alertmanager-overview created
    configmap/grafana-dashboard-apiserver created
    configmap/grafana-dashboard-cluster-total created
    configmap/grafana-dashboard-controller-manager created
    configmap/grafana-dashboard-grafana-overview created
    configmap/grafana-dashboard-k8s-resources-cluster created
    configmap/grafana-dashboard-k8s-resources-namespace created
    configmap/grafana-dashboard-k8s-resources-node created
    configmap/grafana-dashboard-k8s-resources-pod created
    configmap/grafana-dashboard-k8s-resources-workload created
    configmap/grafana-dashboard-k8s-resources-workloads-namespace created
    configmap/grafana-dashboard-kubelet created
    configmap/grafana-dashboard-namespace-by-pod created
    configmap/grafana-dashboard-namespace-by-workload created
    configmap/grafana-dashboard-node-cluster-rsrc-use created
    configmap/grafana-dashboard-node-rsrc-use created
    configmap/grafana-dashboard-nodes-darwin created
    configmap/grafana-dashboard-nodes created
    configmap/grafana-dashboard-persistentvolumesusage created
    configmap/grafana-dashboard-pod-total created
    configmap/grafana-dashboard-prometheus-remote-write created
    configmap/grafana-dashboard-prometheus created
    configmap/grafana-dashboard-proxy created
    configmap/grafana-dashboard-scheduler created
    configmap/grafana-dashboard-workload-total created
    configmap/grafana-dashboards created
    deployment.apps/grafana created
    networkpolicy.networking.k8s.io/grafana created
    prometheusrule.monitoring.coreos.com/grafana-rules created
    service/grafana created
    serviceaccount/grafana created
    servicemonitor.monitoring.coreos.com/grafana created
    prometheusrule.monitoring.coreos.com/kube-prometheus-rules created
    clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
    clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
    deployment.apps/kube-state-metrics created
    networkpolicy.networking.k8s.io/kube-state-metrics created
    prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created
    service/kube-state-metrics created
    serviceaccount/kube-state-metrics created
    servicemonitor.monitoring.coreos.com/kube-state-metrics created
    prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created
    servicemonitor.monitoring.coreos.com/kube-apiserver created
    servicemonitor.monitoring.coreos.com/coredns created
    servicemonitor.monitoring.coreos.com/kube-controller-manager created
    servicemonitor.monitoring.coreos.com/kube-scheduler created
    servicemonitor.monitoring.coreos.com/kubelet created
    clusterrole.rbac.authorization.k8s.io/node-exporter created
    clusterrolebinding.rbac.authorization.k8s.io/node-exporter created
    daemonset.apps/node-exporter created
    networkpolicy.networking.k8s.io/node-exporter created
    prometheusrule.monitoring.coreos.com/node-exporter-rules created
    service/node-exporter created
    serviceaccount/node-exporter created
    servicemonitor.monitoring.coreos.com/node-exporter created
    clusterrole.rbac.authorization.k8s.io/prometheus-k8s created
    clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created
    networkpolicy.networking.k8s.io/prometheus-k8s created
    poddisruptionbudget.policy/prometheus-k8s created
    prometheus.monitoring.coreos.com/k8s created
    prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created
    rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created
    rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
    rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
    rolebinding.rbac.authorization.k8s.io/prometheus-k8s created
    role.rbac.authorization.k8s.io/prometheus-k8s-config created
    role.rbac.authorization.k8s.io/prometheus-k8s created
    role.rbac.authorization.k8s.io/prometheus-k8s created
    role.rbac.authorization.k8s.io/prometheus-k8s created
    service/prometheus-k8s created
    serviceaccount/prometheus-k8s created
    servicemonitor.monitoring.coreos.com/prometheus-k8s created
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
    clusterrole.rbac.authorization.k8s.io/prometheus-adapter created
    clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
    clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created
    clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created
    clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created
    configmap/adapter-config created
    deployment.apps/prometheus-adapter created
    networkpolicy.networking.k8s.io/prometheus-adapter created
    poddisruptionbudget.policy/prometheus-adapter created
    rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created
    service/prometheus-adapter created
    serviceaccount/prometheus-adapter created
    servicemonitor.monitoring.coreos.com/prometheus-adapter created
    clusterrole.rbac.authorization.k8s.io/prometheus-operator created
    clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created
    deployment.apps/prometheus-operator created
    networkpolicy.networking.k8s.io/prometheus-operator created
    prometheusrule.monitoring.coreos.com/prometheus-operator-rules created
    service/prometheus-operator created
    serviceaccount/prometheus-operator created
    servicemonitor.monitoring.coreos.com/prometheus-operator created
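For comparison, the upstream README installs the same two directories in a slightly different way. It uses server-side apply and waits for the CRDs to be registered between the two steps. Server-side apply avoids the size limit on the last-applied-configuration annotation that plain client-side `kubectl apply` runs into with the large CRDs.

Below is a sketch of that sequence, assuming the same directory layout. `run`, `DRY_RUN` and `install_stack` are helpers invented for this example. By default the commands are only printed; set DRY_RUN=0 to execute them.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Print each command; execute it only when DRY_RUN=0 (hypothetical safety switch).
DRY_RUN="${DRY_RUN:-1}"
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

KP_DIR="${KP_DIR:-kube-prometheus-0.14.0}"

install_stack() {
  # 1. CRDs and the monitoring namespace; server-side apply sidesteps the
  #    annotation size limit that client-side apply hits on large CRDs.
  run kubectl apply --server-side -f "$KP_DIR/manifests/setup"
  # 2. Wait until the API server reports every CRD as Established.
  run kubectl wait --for condition=Established --all CustomResourceDefinition --namespace=monitoring
  # 3. The rest of the stack: operator, Prometheus, Alertmanager, Grafana, exporters.
  run kubectl apply -f "$KP_DIR/manifests/"
}

install_stack
```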

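Check the details of the Prometheus resources.

- Two of the images are not easy to download:
- One is prometheus-adapter:v0.12.0, used in kube-prometheus-0.14.0/manifests/prometheusAdapter-deployment.yaml
- The other is kube-state-metrics:v2.13.0, used in kube-prometheus-0.14.0/manifests/kubeStateMetrics-deployment.yaml
- Download them from a network with outside access, then give each image the name the manifests expect:
- `docker tag prometheus-adapter:v0.12.0 registry.k8s.io/prometheus-adapter/prometheus-adapter:v0.12.0`
- `docker tag kube-state-metrics:v2.13.0 registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.13.0`
- Once downloaded, save each image to a local file with `docker save -o <image.tar> image_name:tag`
- Then copy the file to the other machines with `scp` and restore the image there with `docker load -i <image.tar>`. This assumes the nodes run Docker through cri-dockerd. On containerd nodes the equivalent is `ctr -n k8s.io images import <image.tar>`.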
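The manual steps above can be scripted roughly as follows. This is a minimal sketch that assumes a machine which can reach registry.k8s.io and root SSH access to the nodes. The node address root@k8s-node1 and all helper names are placeholders invented for this example.

Pulling the full registry.k8s.io names directly makes the `docker tag` step unnecessary. Commands are only printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # print only; set DRY_RUN=0 to execute
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

# Image names exactly as the v0.14.0 manifests reference them.
IMAGES=(
  registry.k8s.io/prometheus-adapter/prometheus-adapter:v0.12.0
  registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.13.0
)

# registry.k8s.io/a/b:v1 -> b_v1.tar
tar_name() {
  local ref="${1##*/}"   # strip registry and path, keep name:tag
  echo "${ref/:/_}.tar"
}

# Step 1, on a machine with outside access: pull and save every image.
export_images() {
  local img
  for img in "${IMAGES[@]}"; do
    run docker pull "$img"
    run docker save -o "$(tar_name "$img")" "$img"
  done
}

# Step 2: copy the archives to one node and load them there.
# On containerd nodes use: ctr -n k8s.io images import <file>
import_on_node() {
  local node="$1" img f
  for img in "${IMAGES[@]}"; do
    f="$(tar_name "$img")"
    run scp "$f" "$node:/tmp/$f"
    run ssh "$node" docker load -i "/tmp/$f"
  done
}

export_images
import_on_node "${NODE:-root@k8s-node1}"   # hypothetical node address
```

Repeat `import_on_node` for every node the pods might be scheduled on.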

Resources in the monitoring namespace. This output was captured after the Service changes in the next step, which is why grafana, alertmanager-main and prometheus-k8s already show type NodePort:

    ubuntu@master:/data/prometheus/kube-prometheus-0.14.0$ kubectl get -n monitoring all
    NAME                                      READY   STATUS    RESTARTS   AGE
    pod/alertmanager-main-0                   2/2     Running   0          2d18h
    pod/alertmanager-main-1                   2/2     Running   0          2d18h
    pod/alertmanager-main-2                   2/2     Running   0          2d18h
    pod/blackbox-exporter-74465f5fcb-n6td8    3/3     Running   0          2d18h
    pod/grafana-b4bcd98cc-qk86r               1/1     Running   0          2d18h
    pod/kube-state-metrics-69bc7cdcd4-7xn59   3/3     Running   0          2d15h
    pod/node-exporter-2fffb                   2/2     Running   0          2d18h
    pod/node-exporter-5xgt2                   2/2     Running   0          2d18h
    pod/node-exporter-l6tp5                   2/2     Running   0          2d18h
    pod/node-exporter-lt9k8                   2/2     Running   0          2d18h
    pod/prometheus-adapter-c7f899ccd-8xvhs    1/1     Running   0          2d15h
    pod/prometheus-adapter-c7f899ccd-9jztr    1/1     Running   0          2d15h
    pod/prometheus-k8s-0                      2/2     Running   0          2d18h
    pod/prometheus-k8s-1                      2/2     Running   0          2d18h
    pod/prometheus-operator-6f948f56f8-wgc42  2/2     Running   0          2d18h

    NAME                            TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                         AGE
    service/alertmanager-main       NodePort    10.98.35.40      <none>        9093:30393/TCP,8080:31280/TCP   2d18h
    service/alertmanager-operated   ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP      2d18h
    service/blackbox-exporter       ClusterIP   10.107.113.207   <none>        9115/TCP,19115/TCP              2d18h
    service/grafana                 NodePort    10.102.41.170    <none>        3000:30300/TCP                  2d18h
    service/kube-state-metrics      ClusterIP   None             <none>        8443/TCP,9443/TCP               2d18h
    service/node-exporter           ClusterIP   None             <none>        9100/TCP                        2d18h
    service/prometheus-adapter      ClusterIP   10.108.172.76    <none>        443/TCP                         2d18h
    service/prometheus-k8s          NodePort    10.109.60.230    <none>        9090:30390/TCP,8080:31065/TCP   2d18h
    service/prometheus-operated     ClusterIP   None             <none>        9090/TCP                        2d18h
    service/prometheus-operator     ClusterIP   None             <none>        8443/TCP                        2d18h

    NAME                           DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
    daemonset.apps/node-exporter   4         4         4       4            4           kubernetes.io/os=linux   2d18h

    NAME                                  READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/blackbox-exporter     1/1     1            1           2d18h
    deployment.apps/grafana               1/1     1            1           2d18h
    deployment.apps/kube-state-metrics    1/1     1            1           2d18h
    deployment.apps/prometheus-adapter    2/2     2            2           2d18h
    deployment.apps/prometheus-operator   1/1     1            1           2d18h

    NAME                                             DESIRED   CURRENT   READY   AGE
    replicaset.apps/blackbox-exporter-74465f5fcb     1         1         1       2d18h
    replicaset.apps/grafana-b4bcd98cc                1         1         1       2d18h
    replicaset.apps/kube-state-metrics-59dcf5dbb     0         0         0       2d18h
    replicaset.apps/kube-state-metrics-69bc7cdcd4    1         1         1       2d15h
    replicaset.apps/prometheus-adapter-5794d7d9f5    0         0         0       2d18h
    replicaset.apps/prometheus-adapter-c7f899ccd     2         2         2       2d15h
    replicaset.apps/prometheus-operator-6f948f56f8   1         1         1       2d18h
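To check the same thing without reading the whole table, one option is to wait for pod readiness and then list anything that is not Running. Pods whose image could not be pulled stay in the Pending phase, so they show up in the second command. This is only a sketch; the helper names are invented, and commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # print only; set DRY_RUN=0 to execute
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

NS=monitoring

check_pods() {
  # Wait up to 5 minutes for every pod to be Ready; keep going on timeout.
  run kubectl wait --for=condition=Ready pod --all -n "$NS" --timeout=300s || true
  # Show whatever is still not Running (e.g. Pending in ImagePullBackOff).
  run kubectl get pods -n "$NS" '--field-selector=status.phase!=Running'
}

check_pods
```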

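Modify the Service configuration to allow access from outside the cluster. After a default install the services are only reachable from inside the cluster, so the three Services below are switched to type NodePort.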

Step 1: edit grafana-service.yaml (in kube-prometheus-0.14.0/manifests/)

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: grafana
        app.kubernetes.io/name: grafana
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 11.2.0
      name: grafana
      namespace: monitoring
    spec:
      ports:
      - name: http
        nodePort: 30300 # added
        port: 3000
        targetPort: http
      type: NodePort # added
      selector:
        app.kubernetes.io/component: grafana
        app.kubernetes.io/name: grafana
        app.kubernetes.io/part-of: kube-prometheus

Step 2: edit alertmanager-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/instance: main
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 0.27.0
      name: alertmanager-main
      namespace: monitoring
    spec:
      ports:
      - name: web
        nodePort: 30393 # added
        port: 9093
        targetPort: web
      - name: reloader-web
        port: 8080
        targetPort: reloader-web
      type: NodePort # added
      selector:
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/instance: main
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
      sessionAffinity: ClientIP

Step 3: edit prometheus-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: prometheus
        app.kubernetes.io/instance: k8s
        app.kubernetes.io/name: prometheus
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 2.54.1
      name: prometheus-k8s
      namespace: monitoring
    spec:
      ports:
      - name: web
        nodePort: 30390 # added
        port: 9090
        targetPort: web
      - name: reloader-web
        port: 8080
        targetPort: reloader-web
      type: NodePort # added
      selector:
        app.kubernetes.io/component: prometheus
        app.kubernetes.io/instance: k8s
        app.kubernetes.io/name: prometheus
        app.kubernetes.io/part-of: kube-prometheus
      sessionAffinity: ClientIP
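Instead of editing the three files, the same change can be made to the live Services with a JSON patch. The patch switches the type to NodePort and pins the nodePort of the first port entry, which is http for Grafana and web for Alertmanager and Prometheus, as in the YAML above.

This is a sketch. The helper names are invented, and commands are printed unless DRY_RUN=0. Note that the manifest files on disk stay unchanged, so they no longer describe what is actually deployed.

```bash
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # print only; set DRY_RUN=0 to execute
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

# JSON patch: set type to NodePort and a fixed nodePort on ports[0].
nodeport_patch() {
  printf '[{"op":"replace","path":"/spec/type","value":"NodePort"},{"op":"add","path":"/spec/ports/0/nodePort","value":%d}]' "$1"
}

expose() {
  local svc="$1" port="$2"
  run kubectl -n monitoring patch service "$svc" --type=json -p "$(nodeport_patch "$port")"
}

# Same port numbers as in the edited YAML files.
expose grafana 30300
expose alertmanager-main 30393
expose prometheus-k8s 30390
```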

Apply the modified files:

    kubectl apply -f kube-prometheus-0.14.0/manifests/
    alertmanager.monitoring.coreos.com/main unchanged
    networkpolicy.networking.k8s.io/alertmanager-main unchanged
    poddisruptionbudget.policy/alertmanager-main configured
    prometheusrule.monitoring.coreos.com/alertmanager-main-rules unchanged
    secret/alertmanager-main configured
    service/alertmanager-main configured
    serviceaccount/alertmanager-main unchanged
    servicemonitor.monitoring.coreos.com/alertmanager-main unchanged
    clusterrole.rbac.authorization.k8s.io/blackbox-exporter unchanged
    clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter unchanged
    configmap/blackbox-exporter-configuration unchanged
    deployment.apps/blackbox-exporter unchanged
    networkpolicy.networking.k8s.io/blackbox-exporter unchanged
    service/blackbox-exporter unchanged
    serviceaccount/blackbox-exporter unchanged
    servicemonitor.monitoring.coreos.com/blackbox-exporter unchanged
    secret/grafana-config configured
    secret/grafana-datasources configured
    configmap/grafana-dashboard-alertmanager-overview unchanged
    configmap/grafana-dashboard-apiserver unchanged
    configmap/grafana-dashboard-cluster-total unchanged
    configmap/grafana-dashboard-controller-manager unchanged
    configmap/grafana-dashboard-grafana-overview unchanged
    configmap/grafana-dashboard-k8s-resources-cluster unchanged
    configmap/grafana-dashboard-k8s-resources-multicluster unchanged
    configmap/grafana-dashboard-k8s-resources-namespace unchanged
    configmap/grafana-dashboard-k8s-resources-node unchanged
    configmap/grafana-dashboard-k8s-resources-pod unchanged
    configmap/grafana-dashboard-k8s-resources-workload unchanged
    configmap/grafana-dashboard-k8s-resources-workloads-namespace unchanged
    configmap/grafana-dashboard-kubelet unchanged
    configmap/grafana-dashboard-namespace-by-pod unchanged
    configmap/grafana-dashboard-namespace-by-workload unchanged
    configmap/grafana-dashboard-node-cluster-rsrc-use unchanged
    configmap/grafana-dashboard-node-rsrc-use unchanged
    configmap/grafana-dashboard-nodes-darwin unchanged
    configmap/grafana-dashboard-nodes unchanged
    configmap/grafana-dashboard-persistentvolumesusage unchanged
    configmap/grafana-dashboard-pod-total unchanged
    configmap/grafana-dashboard-prometheus-remote-write unchanged
    configmap/grafana-dashboard-prometheus unchanged
    configmap/grafana-dashboard-proxy unchanged
    configmap/grafana-dashboard-scheduler unchanged
    configmap/grafana-dashboard-workload-total unchanged
    configmap/grafana-dashboards unchanged
    deployment.apps/grafana configured
    networkpolicy.networking.k8s.io/grafana unchanged
    prometheusrule.monitoring.coreos.com/grafana-rules unchanged
    service/grafana configured
    serviceaccount/grafana unchanged
    servicemonitor.monitoring.coreos.com/grafana unchanged
    prometheusrule.monitoring.coreos.com/kube-prometheus-rules unchanged
    clusterrole.rbac.authorization.k8s.io/kube-state-metrics unchanged
    clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics unchanged
    deployment.apps/kube-state-metrics unchanged
    networkpolicy.networking.k8s.io/kube-state-metrics unchanged
    prometheusrule.monitoring.coreos.com/kube-state-metrics-rules unchanged
    service/kube-state-metrics unchanged
    serviceaccount/kube-state-metrics unchanged
    servicemonitor.monitoring.coreos.com/kube-state-metrics unchanged
    prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules unchanged
    servicemonitor.monitoring.coreos.com/kube-apiserver unchanged
    servicemonitor.monitoring.coreos.com/coredns unchanged
    servicemonitor.monitoring.coreos.com/kube-controller-manager unchanged
    servicemonitor.monitoring.coreos.com/kube-scheduler unchanged
    servicemonitor.monitoring.coreos.com/kubelet unchanged
    clusterrole.rbac.authorization.k8s.io/node-exporter unchanged
    clusterrolebinding.rbac.authorization.k8s.io/node-exporter unchanged
    daemonset.apps/node-exporter unchanged
    networkpolicy.networking.k8s.io/node-exporter unchanged
    prometheusrule.monitoring.coreos.com/node-exporter-rules unchanged
    service/node-exporter unchanged
    serviceaccount/node-exporter unchanged
    servicemonitor.monitoring.coreos.com/node-exporter unchanged
    clusterrole.rbac.authorization.k8s.io/prometheus-k8s unchanged
    clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged
    networkpolicy.networking.k8s.io/prometheus-k8s unchanged
    poddisruptionbudget.policy/prometheus-k8s configured
    prometheus.monitoring.coreos.com/k8s unchanged
    prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules unchanged
    rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config unchanged
    rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged
    rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged
    rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged
    role.rbac.authorization.k8s.io/prometheus-k8s-config unchanged
    role.rbac.authorization.k8s.io/prometheus-k8s unchanged
    role.rbac.authorization.k8s.io/prometheus-k8s unchanged
    role.rbac.authorization.k8s.io/prometheus-k8s unchanged
    service/prometheus-k8s configured
    serviceaccount/prometheus-k8s unchanged
    servicemonitor.monitoring.coreos.com/prometheus-k8s unchanged
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io unchanged
    clusterrole.rbac.authorization.k8s.io/prometheus-adapter unchanged
    clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader unchanged
    clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter unchanged
    clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator unchanged
    clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources unchanged
    configmap/adapter-config unchanged
    deployment.apps/prometheus-adapter configured
    networkpolicy.networking.k8s.io/prometheus-adapter unchanged
    poddisruptionbudget.policy/prometheus-adapter configured
    rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader unchanged
    service/prometheus-adapter unchanged
    serviceaccount/prometheus-adapter unchanged
    servicemonitor.monitoring.coreos.com/prometheus-adapter unchanged
    clusterrole.rbac.authorization.k8s.io/prometheus-operator unchanged
    clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator unchanged
    deployment.apps/prometheus-operator unchanged
    networkpolicy.networking.k8s.io/prometheus-operator unchanged
    prometheusrule.monitoring.coreos.com/prometheus-operator-rules unchanged
    service/prometheus-operator unchanged
    serviceaccount/prometheus-operator unchanged
    servicemonitor.monitoring.coreos.com/prometheus-operator unchanged
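After the apply, the three Services reported as configured should be of type NodePort. Below is a quick way to confirm which node ports were assigned. It is a sketch; the helper name is invented, and the command is printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # print only; set DRY_RUN=0 to execute
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

show_nodeports() {
  # One row per Service: name, type and all node ports (comma-separated).
  run kubectl -n monitoring get service grafana alertmanager-main prometheus-k8s \
    -o 'custom-columns=NAME:.metadata.name,TYPE:.spec.type,NODEPORTS:.spec.ports[*].nodePort'
}

show_nodeports
```

With the settings above, grafana should list 30300. alertmanager-main and prometheus-k8s should list 30393 and 30390 respectively, each plus a randomly assigned port for reloader-web (31280 and 31065 in the `kubectl get` output earlier in this post).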

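Delete the NetworkPolicies

kube-prometheus ships NetworkPolicy objects by default that admit ingress only from selected pods of the monitoring stack. The corresponding policies must therefore be deleted before the UIs can be reached from outside the cluster. This only matters if your CNI plugin enforces NetworkPolicy.

Run the commands below from inside kube-prometheus-0.14.0. Re-applying manifests/ later will recreate the policies.

    kubectl delete -f manifests/prometheus-networkPolicy.yaml
    kubectl delete -f manifests/grafana-networkPolicy.yaml
    kubectl delete -f manifests/alertmanager-networkPolicy.yaml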
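Once the policies are gone, the three UIs should answer on any node's IP. The sketch below lists the NetworkPolicies that remain and then checks the built-in health endpoints: Prometheus and Alertmanager expose /-/ready, and Grafana exposes /api/health.

NODE_IP is a placeholder you must replace, and the helper names are invented. Commands are printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # print only; set DRY_RUN=0 to execute
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

NODE_IP="${NODE_IP:-192.0.2.10}"   # placeholder: the IP of any cluster node

probe_urls() {
  echo "http://$NODE_IP:30390/-/ready"     # Prometheus
  echo "http://$NODE_IP:30393/-/ready"     # Alertmanager
  echo "http://$NODE_IP:30300/api/health"  # Grafana
}

check_access() {
  # Policies that remain (exporters, adapter, operator are not deleted above).
  run kubectl -n monitoring get networkpolicy
  local url
  for url in $(probe_urls); do
    # Prints the HTTP status code; a timeout usually means traffic is still blocked.
    run curl -sS -o /dev/null -m 5 -w '%{http_code}\n' "$url" || echo "unreachable: $url"
  done
}

check_access
```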

Done.

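The officially recommended approach is actually to expose these UIs through an Ingress, which requires setting up a domain name and an Ingress controller.

I did not try it myself because this is a test environment, but it is the recommended option for production.

Here is a link:

https://blog.csdn.net/u011709380/article/details/136382904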
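For reference, a minimal Ingress for Grafana might look like the sketch below. Like the Ingress approach itself in this post, it is untested here.

It assumes an NGINX Ingress controller with the class name nginx and a placeholder host grafana.example.com. It targets the grafana Service on port 3000 from the manifests. If the grafana NetworkPolicy is still present, it may also block traffic coming from the Ingress controller.

The manifest is only printed unless DRY_RUN=0, in which case it is piped to `kubectl apply`.

```bash
#!/usr/bin/env bash
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"   # print only; set DRY_RUN=0 to apply

# Placeholder host and ingress class; adjust to your DNS and controller.
grafana_ingress() {
  local host="${1:-grafana.example.com}" class="${2:-nginx}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana
  namespace: monitoring
spec:
  ingressClassName: ${class}
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
EOF
}

if [ "$DRY_RUN" = "0" ]; then
  grafana_ingress | kubectl apply -f -
else
  grafana_ingress
fi
```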

This post will be updated later with alerting-related configuration changes.
